Optimizing multicore architectures for safety-critical applications

March 07, 2019

While multicore processors offer designers of safety-critical avionics the significant benefits of smaller size, lower power, and increased performance, bringing those benefits to safety-critical systems has proved challenging. That’s due mainly to the complexity of validating and certifying multicore software and hardware architectures. Of principal concern is how an application running on one core can interfere with an application running on another core, negatively affecting determinism, quality of service, and – ultimately – safety.

Efforts to ease the safety-critical implementation of multicore processors are underway. Several standards have been updated to address multicore issues.

These include ARINC 653, which covers space and time partitioning of real-time operating systems (RTOSs) for safety-critical avionics applications. ARINC 653 was updated in 2015 (ARINC 653 Part 1 Supplement 4) to address multicore operation for individual applications, which it calls “partitions.” The Open Group’s Future Airborne Capability Environment (FACE) technical standard version 3.0 addresses multicore support by requiring compliance with Supplement 4. Additionally, the Certification Authority Software Team (CAST) – supported by the FAA, EASA, TCCA, and other aviation authorities – has published a position paper with guidance for multicore systems called CAST-32A. Together, these documents provide the requirements for successfully using multicore solutions for applications certifiable up to DAL A, the highest RTCA/DO-178C design assurance level for safety-critical software.

Benefits of multicore

The benefits of a multicore architecture are numerous and compelling:

- Higher throughput: Multithreaded applications scale in throughput when their threads run on multiple cores, and multiple single-threaded applications run faster when each runs concurrently on its own core. With optimal core utilization, throughput can scale linearly with the number of cores.

- Better SWaP [size, weight, and power]: Applications can run on separate cores in a single multicore processor instead of on separate single-core processors. For airborne systems, lower SWaP reduces costs and extends flight time.

- Room for future growth: The higher performance of multicore processors supports future requirements and applications.

- Longer supply availability: Most single-core chips are obsolete or close to obsolete. A multicore chip offers a processor at the start of its supply life.

Challenges for multicore in safety-critical applications

In a single-core processor, multiple safety-critical applications can execute on the same processor when the memory space and processor time are robustly partitioned between the hosted applications. Memory-space partitioning dedicates a nonoverlapping portion of memory to each application running at a given time, enforced by the processor’s memory management unit (MMU). Time partitioning divides a fixed time interval, called a major frame, into a sequence of fixed subintervals referred to as partition time windows. Each application is allocated one or more partition time windows, with the length and number of windows determined by the application’s worst-case execution time (WCET) and required repetition rate. The operating system (OS) ensures that each application has access to the processor’s core during its allocated time. Applying these safety-critical techniques to multicore processors requires overcoming several complicated challenges, the most difficult being interference between cores via shared resources.
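The time-partitioning scheme described above can be illustrated with a minimal Python sketch. It is not taken from ARINC 653 or from any RTOS configuration format: the `Window` type, the `check_schedule` function, the partition names, and all timing values are hypothetical. It also assumes, for simplicity, that each partition needs its full WCET once per major frame.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Window:
    """One partition time window inside the major frame (times in microseconds)."""
    partition: str
    start_us: int
    length_us: int


def check_schedule(major_frame_us, windows, wcet_us):
    """Return a list of problems; an empty list means the schedule is consistent."""
    problems = []

    # Windows must not overlap one another and must end inside the major frame.
    previous_end = 0
    for w in sorted(windows, key=lambda w: w.start_us):
        end = w.start_us + w.length_us
        if w.start_us < previous_end:
            problems.append(f"{w.partition}: window at {w.start_us} us overlaps an earlier window")
        if end > major_frame_us:
            problems.append(f"{w.partition}: window at {w.start_us} us runs past the major frame")
        previous_end = max(previous_end, end)

    # Assumption: each partition needs its full WCET once per major frame.
    for partition, wcet in wcet_us.items():
        allocated = sum(w.length_us for w in windows if w.partition == partition)
        if allocated < wcet:
            problems.append(f"{partition}: {allocated} us allocated, WCET is {wcet} us")
    return problems


# Hypothetical 20 ms major frame with four partition time windows.
MAJOR_FRAME_US = 20_000
WINDOWS = [
    Window("flight_control", 0, 5_000),
    Window("display", 5_000, 3_000),
    Window("flight_control", 10_000, 5_000),
    Window("maintenance", 15_000, 4_000),
]
WCET_US = {"flight_control": 9_000, "display": 3_000, "maintenance": 4_500}

for problem in check_schedule(MAJOR_FRAME_US, WINDOWS, WCET_US):
    print(problem)
```

In this made-up schedule the only reported problem is that the maintenance partition has 4,000 us of window time against a 4,500 us WCET. A real integration tool would also account for repetition rates and context-switch overhead.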

Interference between cores

In a multicore environment, each processing core has limited dedicated resources. All multicore hardware architectures also include shared resources, such as memory controllers, DDR memory, I/O, cache, and the internal fabric that connects them (Figure 1). Contention results when multiple cores try to access the same resource concurrently, which means that a lower-criticality application/partition could keep a higher-criticality application/partition from performing its intended function. In a quad-core system with cores accessing only DDR memory over the interconnect (i.e., no I/O access), multiple sources of interference from multiple cores have been shown to increase WCET by more than 12 times. Because of the shared-resource arbitration and scheduling algorithms in the DDR controller, fairness is not guaranteed and interference impacts are often nonlinear. In fact, tests show that a single interfering core can increase WCET on another core by a factor of 8.
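To make the scale of those factors concrete, the following Python sketch applies them to a partition time window. The function name `overrun_us`, the 400 us isolated WCET, and the 1,000 us window are hypothetical values chosen for illustration. Only the factors 8 and 12 come from the text, and the sketch assumes the whole WCET scales by the quoted factor.

```python
def overrun_us(isolated_wcet_us, interference_factor, window_us):
    """Return how far the inflated WCET overruns the window (0 if it still fits)."""
    inflated_wcet_us = isolated_wcet_us * interference_factor
    return max(0, inflated_wcet_us - window_us)


ISOLATED_WCET_US = 400   # hypothetical WCET measured with the other cores idle
WINDOW_US = 1_000        # hypothetical window, 2.5x the isolated WCET

for factor in (1, 8, 12):  # 8 and 12 are the factors quoted in the text
    print(factor, overrun_us(ISOLATED_WCET_US, factor, WINDOW_US))
```

With these assumed numbers, a window that has a 2.5x margin when the application runs alone is overrun by 2,200 us at a factor of 8 and by 3,800 us at a factor of 12. That is why a margin sized from single-core measurements cannot simply be carried over to a multicore system.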

Figure 1: Separate processor cores (in gray) share many resources (in green) ranging from the interconnect to the memory and I/O.


CAST-32A provides certification guidance for addressing interference in multicore processors. One approach is to create a special use case based on testing and analysis of the WCET of every application/partition and its worst-case utilization of shared resources. Special use case solutions, though, can lead to vendor lock and require complete WCET reverification of all integrated applications whenever any one application/partition changes, which makes that approach a significant barrier to the implementation and sustainment of an integrated modular avionics (IMA) system. Without OS mechanisms and tools to support the mitigation of interference, sustainment costs and risk are very high.

The better approach is to have the OS effectively manage interference based on the availability of DAL A runtime mechanisms, libraries, and tools that address CAST-32A objectives. This provides the system integrator with an effective, flexible, and agile solution. It also simplifies the addition of new applications without major changes to the system architecture, reduces reverification activities, and helps eliminate OEM vendor lock.

Porting single-core software designs to multicore

While porting an existing safety system to a multicore platform provides more computing resources, the WCET of a given application can increase due to longer latency to access shared resources or interference from other cores. New analysis is needed to determine whether other resources, such as memory, memory controllers, and intercore communications, become new bottlenecks. Even when individual resources run faster, changes in relative performance can cause an application to stop working or to behave in a nondeterministic manner.

Effective utilization of multicore resources

To achieve the throughput and SWaP benefits of multicore solutions, the software architecture needs to support high utilization of the available processor cores. All multicore features must be supported, from enabling concurrent operation of cores (versus available cores being forced into an idle state or held in reset at startup) to providing a mechanism for deterministic load balancing. The more flexible the software multiprocessing architecture, the more tools the system architect has to achieve high utilization. [1]

Software multiprocessing architectures

Like multiprocessor systems, the software architecture on multicore processors can be classified by how memory from other processors or cores is accessed and whether each processor or core runs its own copy of the OS. The simplest software architecture for a multicore-based system is asymmetric multi-processing (AMP), where each core runs independently, each with its own OS or a guest OS on top of a hypervisor. Each core runs a different application with little or no meaningful coordination between the cores in terms of scheduling. This decoupling can result in underutilization due to lack of load balancing, difficulty mitigating shared resource contention, and the inability to perform coordinated activity across cores, such as that required for comprehensive built-in test.

The modern alternative is symmetric multi-processing (SMP), where a single OS controls all the resources, including which application threads are run on which cores. This architecture is easy to program because all cores access resources “symmetrically,” freeing the OS to assign any thread to any core.

Not knowing which threads will be running on which cores is a major challenge and a risk for deterministic operation in critical systems. To address this issue, CAST-32A references the use of bound multi-processing (BMP). BMP is an enhanced and restricted form of SMP that statically binds an application’s tasks to specific cores, enabling the system architect to tightly control the concurrent operation of multiple cores. BMP directly follows the multicore requirement in ARINC 653 Supplement 4 section 2.2.1, which states: “Multiple processes within a partition scheduled to execute concurrently on different processor cores.”
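The static binding that distinguishes BMP from SMP can be pictured as a fixed task-to-core table that is checked before the system runs. The Python sketch below is illustrative only: `validate_binding`, `tasks_by_core`, the task names, and the core numbers are hypothetical and are not drawn from CAST-32A, ARINC 653, or any RTOS API.

```python
def validate_binding(binding, partition_cores):
    """Check a static task-to-core table against the cores granted to the partition."""
    return [
        f"{task} is bound to core {core}, which is outside the partition"
        for task, core in sorted(binding.items())
        if core not in partition_cores
    ]


def tasks_by_core(binding):
    """Invert the table so the integrator can see exactly which tasks run on which core."""
    by_core = {}
    for task, core in sorted(binding.items()):
        by_core.setdefault(core, []).append(task)
    return by_core


# Hypothetical partition that has been granted cores 0 and 1.
PARTITION_CORES = {0, 1}
BINDING = {"sensor_fusion": 0, "guidance": 1, "telemetry": 1}

print(validate_binding(BINDING, PARTITION_CORES))
print(tasks_by_core(BINDING))
```

Because the table is fixed ahead of time, the set of tasks that can ever run concurrently on different cores is known before execution. Under plain SMP that set is decided by the scheduler at run time.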

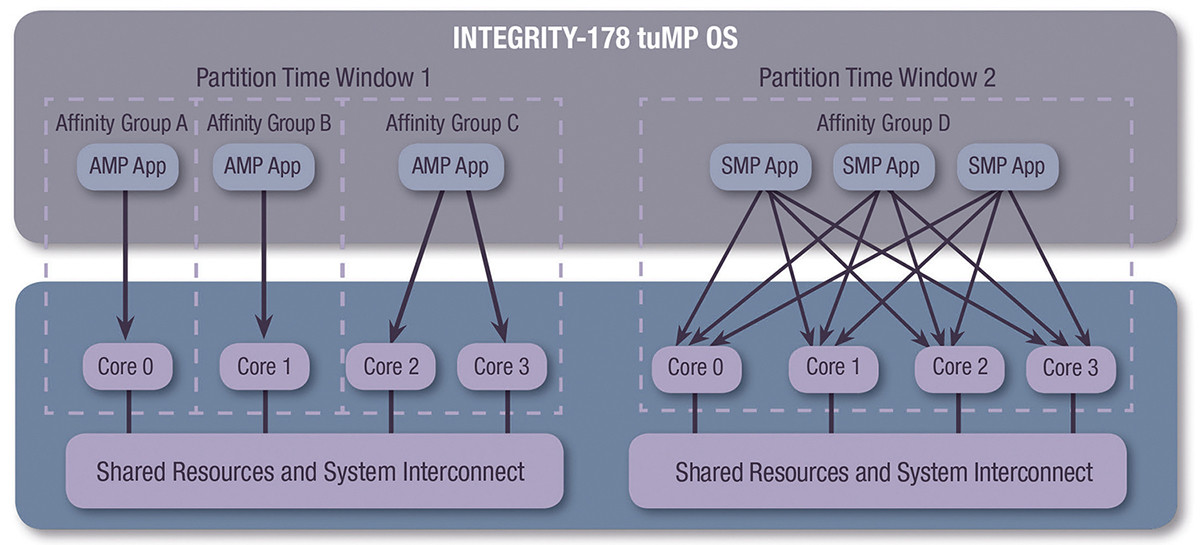

An example of a multicore RTOS for safety certification is Green Hills Software’s INTEGRITY-178 tuMP, a unified multicore RTOS that supports simultaneous combinations of AMP, SMP, and BMP. The RTOS’s time-variant unified multi-processing (tuMP) approach provides flexibility for porting, extending, and optimizing safety-critical and security-critical applications to a multicore architecture. It starts with a time-partitioned kernel running across all cores that allows any combination of AMP, SMP, and BMP applications to be bound to a core or to a group of cores, called an affinity group (Figure 2).

Figure 2: The time-variant capability of INTEGRITY-178 tuMP enables different binding of applications to cores in different partition time windows.


It then adds time variance so that partition time windows do not need to be aligned across cores. INTEGRITY-178 tuMP also includes a bandwidth allocation and monitoring (BAM) capability, developed to DO-178C DAL A objectives. BAM is intended to enable system integrators to identify and mitigate interference on multicore-based systems, directly addressing the CAST-32A guidance and lowering integration and certification risk.
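The article does not describe how BAM works internally, so the following Python sketch should not be read as the INTEGRITY-178 tuMP interface. It shows only the general idea of budget-based bandwidth regulation: each core gets an allowance of shared-memory accesses per regulation period, and requests beyond the allowance are refused until the period restarts. The class name, method names, and budget values are all hypothetical.

```python
class BandwidthRegulator:
    """Generic per-core access budget, replenished at the start of every period."""

    def __init__(self, budgets):
        self.budgets = dict(budgets)              # core -> accesses allowed per period
        self.used = {core: 0 for core in budgets}  # core -> accesses consumed so far

    def start_period(self):
        """Replenish every core's budget."""
        for core in self.used:
            self.used[core] = 0

    def request(self, core, accesses):
        """Grant the accesses if they fit in the remaining budget, otherwise refuse."""
        if self.used[core] + accesses > self.budgets[core]:
            return False  # the core would have to wait for the next period
        self.used[core] += accesses
        return True

    def remaining(self, core):
        """Report the unused budget, which a monitoring tool could log."""
        return self.budgets[core] - self.used[core]


# Hypothetical budgets: core 0 hosts the high-criticality partition.
regulator = BandwidthRegulator({0: 1_000, 1: 200})
print(regulator.request(1, 150))   # fits in core 1's budget
print(regulator.request(1, 100))   # would exceed core 1's budget
print(regulator.remaining(0))      # core 0's budget is untouched
```

Capping the low-criticality core in this way bounds how much contention it can impose on the core that hosts the high-criticality partition, which is the kind of mitigation the CAST-32A guidance asks the integrator to demonstrate.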

The hardware perspective: DO-254 certifiable multicore hardware

In addition to DO-178C certification of the software, full safety certification of an aircraft also requires DO-254 certification of the hardware. Current and emerging aerospace requirements demand hardware processing capability that can support multiple functions and applications with mixed levels of safety criticality. These requirements, along with intense computational needs and architectures that include multicore processors, highlight a clear and pressing need for RTOS technology capable of preventing performance degradation and shared resource contention.

Hardware architectures that include multicore processing technology must be deliberately designed to set the number of active cores and the execution frequency, to specify which multicore processor (MCP) peripherals are activated, and to determine the hardware support for shared memory and cache. In safety-critical applications, a multicore processor must be carefully selected and its host board architected based on several key factors, including the processor’s service history, availability of manufacturing and quality data, I/O capabilities, performance levels, and power consumption.

An example of a single board computer (SBC) designed to support safety-critical multicore applications is Curtiss-Wright’s VPX3-152 based on the NXP QorIQ T2080 Power Architecture processor (Figure 3). The quad-core T2080 meets the performance requirements of many DAL A applications at a relatively low level of power consumption. The T2080’s 16 available SerDes lanes effectively double the number of functions that can be directly serviced from the processor, thereby simplifying the overall board design and certification effort.

Figure 3: The VPX3-152 SBC is designed to support safety-critical multicore applications. The quad-core T2080 processor meets the performance requirements of many DAL A applications while using relatively low levels of power.


The full capability of these multicore processors is realized when complemented by an RTOS that enables system designers and integrators to utilize all available compute power from the processor’s cores in a high-assurance manner. Use of a safety-critical, multicore SBC and an RTOS that provides deterministic, user-defined core and scheduling assignments can ensure that the performance capabilities of multicore hardware are fully achieved.

Notes

[1] This topic is specifically discussed in ARINC 653 Part 1 Supplement 4 section 2.2.1 as multiple processes (i.e., threads) within a partition executing concurrently across multiple cores and as concurrent partition execution.

Rich Jaenicke is director of marketing for Green Hills Software. Prior to Green Hills, he served as director of strategic marketing and alliances at Mercury Systems, and held marketing and technology positions at XCube, EMC, and AMD. Rich earned an MS in computer systems engineering from Rensselaer Polytechnic Institute and a BA in computer science from Dartmouth College.

Rick Hearn is the product manager for safety-certifiable solutions for Curtiss-Wright Defense Solutions. Rick has over 25 years of experience in design and design management positions in the telecommunications and defense industries, including 11 years of experience in design management and program management at Curtiss-Wright Defense Solutions.

Green Hills Software

www.ghs.com

Curtiss-Wright Defense Solutions

www.curtisswrightds.com