VITA 65, serial fabrics, and HPEC: Decisions, decisions

October 10, 2012

Organizations going the VITA 65/OpenVPX route as part of a planned HPEC deployment have an important decision to make in terms of choice of serial switched fabrics. The decision is based, however, not only on technical specifications and performance, but on other factors as well – factors that are potentially more critical.

When VITA 65 – OpenVPX – was ratified by ANSI in June 2010, it turned the technical promise and potential of the VITA 46 (VPX) standard into a commercial reality. Where VPX was focused at the board level, OpenVPX created a framework for turning boards into useful systems. The relationship between the two is perhaps best characterized as: VPX provided the ingredients – but VITA 65/OpenVPX provided the recipe.

Fundamental to the OpenVPX philosophy was the idea of interoperability – of fostering an ecosystem of VPX hardware and software from multiple vendors that was guaranteed to be able to play nicely together. Competition between those vendors would help drive prices down, while a burgeoning infrastructure of complementary products and services could help minimize program risk and time-to-market.

But while VITA 65 answered many of the questions that VITA 46 had implicitly posed, there were others that it – intentionally – did not. A significant element of the attraction of VITA 46 and VITA 65 lay in their flexibility. Program managers planning to take advantage of what VITA 65 offers are still left with questions to answer – and none of these is more fundamental than the choice of serial switched fabric, the technology at the heart of VPX.

That choice is, however, about much more than relative performance. Other factors are as important – and perhaps more so.

Those questions have become even more pressing as increasing numbers of military programs look toward what High Performance Embedded Computing (HPEC) can do for them. HPEC takes the principles of High Performance Computing (HPC) – massed ranks of powerful servers operating in parallel on the world’s toughest processing tasks – and applies them to embedded computing. The result is game-changing levels of performance on deployed embedded platforms using Modular Open System Architectures (MOSA), an architectural approach enabled by VITA 65.

In conjunction with other open architectures and middleware such as Future Airborne Capability Environment (FACE), OpenFabrics Enterprise Distribution (OFED), and Open MPI, OpenVPX provides a compelling proposition. Take, for example, the processing of sensor-acquired data. “Behind” the sensors, DSP and image processing algorithms extract and track targets, and the applications apply powerful algorithms to deduce identities and patterns and deliver actionable graphic information to the screen – in the minimum possible time. It’s the kind of demanding application that lends itself readily to the parallelism that is possible with multiple processors, including multicore processors and many-core Graphics Processing Units (GPUs).

High-speed communication between those processors is essential in HPEC applications – and that’s the province of serial switched fabrics. But which is best? 10 GbE? PCI Express? InfiniBand? Serial RapidIO? Let’s consider their differences.

10 GbE, PCIe, InfiniBand, and Serial RapidIO in depth

10 GbE, PCI Express, Serial RapidIO, and InfiniBand (Figure 1) are all part of a class of interconnects that uses switched serial fabrics. These are point-to-point connections that are typically (but not always) directed through one or more switches. Smaller systems can be constructed without switching where either all-to-all connection is not needed – say a pipeline dataflow – or the number of endpoints is not more than the number of fabric ports per endpoint plus one. (That is, if a board has two fabric ports, three of them can be connected with a full mesh without a switch.)
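
The switchless rule in the parenthetical above can be checked with a few lines of Python. This is a minimal sketch: the function names are illustrative and not taken from any VITA specification. It assumes every endpoint has the same number of fabric ports and that each point-to-point link uses one port at each end.

```python
from itertools import combinations


def fits_full_mesh_without_switch(endpoints: int, ports_per_endpoint: int) -> bool:
    """True if every endpoint can link directly to every other endpoint.

    In a full mesh each endpoint needs (endpoints - 1) ports, so the
    largest switchless mesh has ports_per_endpoint + 1 endpoints.
    """
    return endpoints <= ports_per_endpoint + 1


def full_mesh_links(endpoints: int) -> list[tuple[int, int]]:
    """List the point-to-point links of a full mesh as endpoint pairs."""
    return list(combinations(range(endpoints), 2))


if __name__ == "__main__":
    # Three boards with two fabric ports each: a full mesh is possible.
    print(fits_full_mesh_without_switch(3, 2))   # True
    print(full_mesh_links(3))                    # [(0, 1), (0, 2), (1, 2)]
    # A fourth board would need three ports per board, so a switch is required.
    print(fits_full_mesh_without_switch(4, 2))   # False
```

A pipeline dataflow is not covered by this check. It needs only neighbor-to-neighbor links, so it can run without a switch at any length with two ports per board.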

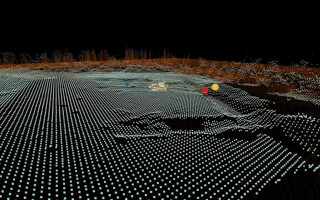

Figure 1: While performance characteristics are important, there are other considerations in choosing a serial switched fabric.

There are many similarities between the different fabrics; in fact, most of them share the same electrical characteristics but vary in the protocols that are used to convey the data. Because of this, the raw transmission rates are generally comparable, making any of them a potential candidate for an HPEC implementation. Where they differ is in the higher protocol levels. Each is constructed from a number of “lanes” ganged together, where a lane is two differential signaling pairs occupying four physical wires or traces. One pair transmits data, while the other receives in a full-duplex link. The signal clock is embedded in the data stream, which mitigates some of the restrictions seen in parallel buses as signal frequencies and transmission lengths increase. Most of the protocols use a form of encoding that is designed to reduce DC bias on the lines and to provide enough edge transitions to allow efficient clock recovery. The most common scheme is 8b/10b where the 8 bits that comprise a byte of data are coded to 10 bits for transmission. This places a 25 percent overhead burden on the data transmission. Some newer protocols have adopted 64b/66b coding with a more efficient 3.125 percent overhead or 128b/130b, which has half of that overhead.
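
The overhead figures quoted above are the extra coded bits expressed as a fraction of the payload bits. The short Python sketch below reproduces that arithmetic. The helper names are illustrative, and it counts only line-coding overhead, not packet headers or other protocol costs.

```python
from fractions import Fraction


def coding_overhead(payload_bits: int, coded_bits: int) -> Fraction:
    """Extra bits transmitted per payload bit (e.g. 2/8 for 8b/10b)."""
    return Fraction(coded_bits - payload_bits, payload_bits)


def coding_efficiency(payload_bits: int, coded_bits: int) -> Fraction:
    """Share of the raw line rate that carries payload (e.g. 8/10)."""
    return Fraction(payload_bits, coded_bits)


# Line codes named in the text: (payload bits, coded bits).
SCHEMES = {"8b/10b": (8, 10), "64b/66b": (64, 66), "128b/130b": (128, 130)}

if __name__ == "__main__":
    for name, (payload, coded) in SCHEMES.items():
        overhead = float(coding_overhead(payload, coded)) * 100
        efficiency = float(coding_efficiency(payload, coded)) * 100
        print(f"{name}: overhead {overhead:.4f}%, efficiency {efficiency:.2f}%")
```

Measured against the payload, 8b/10b costs 25 percent. Measured against the raw line rate, the same code leaves 80 percent of the bits for data, which is the figure that matters when converting a signaling rate into usable bandwidth.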

There are several clock rate options, but for OpenVPX systems using the current connectors and backplane construction, the most commonly seen ones are 3.125 GHz for 10 GbE and 5 GHz for the other three. So, for a typical x4 backplane link, all three 5 GHz fabrics produce comparable results. As InfiniBand and Serial RapidIO are designed for message passing schemes, they tend to be used on the OpenVPX data plane.
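
As a rough worked example, the payload bandwidth of a x4 link follows from the per-lane rate, the lane count, and the line-coding efficiency. The sketch below treats the quoted clock rates as per-lane signaling rates and assumes 8b/10b coding on all four fabrics. The article does not say which code each fabric uses at these rates, so that assumption is ours. The function name is illustrative, and overhead above the line code (packet headers, flow control) is ignored.

```python
def effective_gbps(lane_rate_g: float, lanes: int = 4,
                   payload_bits: int = 8, coded_bits: int = 10) -> float:
    """Payload bandwidth in Gbit/s, per direction, for a multi-lane link.

    lane_rate_g is the per-lane signaling rate; the default coding
    parameters are an assumption (8b/10b), not taken from the article.
    """
    return lane_rate_g * lanes * payload_bits / coded_bits


if __name__ == "__main__":
    # Per-lane rates quoted in the text for a typical x4 backplane link.
    print(effective_gbps(3.125))  # 10 GbE: 10.0 Gbit/s
    print(effective_gbps(5.0))    # the three 5 GHz fabrics: 16.0 Gbit/s
```

Under these assumptions the three 5 GHz fabrics come out identical at the line-coding level, which is consistent with the point that they produce comparable results.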

PCI Express is most often used to connect peripherals to processors (as is implied by its full name – Peripheral Component Interconnect Express) and so shows up most often on the expansion plane. PCI Express can be used as a peer-to-peer interconnect, but several factors can complicate this. The PCI Express architecture assumes one root node that is tasked with enumerating the fabric. Connecting several processors in this manner usually requires employing nontransparent bridging to separate out each node into its own domain. The domains are then connected with mapping windows. Typically, these windows are limited in size and number, and this tends to be the restricting factor in how many nodes can be interconnected. This can work fine for smaller clusters, but for larger systems, Serial RapidIO and InfiniBand offer better scalability. 10 GbE yields slightly lower bandwidth because of the lower clock rate, but with RDMA technology, it is comparable in latency and processor overhead and benefits from ubiquity and user familiarity.

So, when it comes to HPEC applications: Why do we care so much about interconnect bandwidth? One reason is that, as processor speeds increase – or as is prevalent these days, more cores are included per processing node – the interconnect scheme needs to follow suit in order to build multiprocessor systems that actually scale application performance (Figure 2). It is no use adding processors if they are starved for data. Locality of data can become increasingly important. In other words, the further a processor has to reach for data, the slower the data transfers are. The closest locations are the caches, then local memory, and finally data located on other processors connected by the network fabric.

Figure 2: GE’s NETernity GBX460 data plane switch module supports HPEC cluster architectures.

Performance isn’t everything

But, increasingly, the argument is no longer about “feeds and speeds.” Each serial switched fabric has, inevitably, its strengths and weaknesses – but these are not always about performance. Oftentimes, the application may demand that one is preferred to another. 10 GbE, PCI Express, InfiniBand, and Serial RapidIO all have their place.

Despite some – largely inaccurate – assertions to the contrary, there is little difference among the various serial switched fabrics in performance terms. More important than a faster clock speed or higher bandwidth are the same commercial considerations that drove the development of VITA 65/OpenVPX in the first place. What matters most to today’s military embedded computing programs is minimizing cost, risk, and time-to-market – and that points to the ready availability of a broad range of potential suppliers, tools, software, support, and expertise.

While PCI Express has the required supporting infrastructure, it is not ideal as a contender for scalable peer-to-peer interboard communication simply because, architecturally, that’s not what it was designed to do nor where its strength lies. That leaves 10 GbE, InfiniBand, and Serial RapidIO. Serial RapidIO fails two tests: interoperability with the GPGPU technology that is a fundamental element of many HPEC implementations, and widespread commercial support within the HPC cluster computing market, which is central to harnessing the full potential of VITA 65 MOSA platforms. It is typically found within the commercial communications infrastructure market, notably in Power Architecture environments, and in some specialized DSP devices in which it is natively supported.

InfiniBand, on the other hand, brings with it a strong market presence within the world’s TOP500 (www.top500.org) HPC platforms along with wide community support in the form of tools, middleware, and know-how. It coexists well with the Intel architectures that are now assuming increasing significance in military embedded computing. It is also a natural partner for GPGPU technology: NVIDIA and Mellanox have worked together to develop an efficient mechanism that allows InfiniBand to deliver data directly to the GPGPU’s processor memory – rather than incurring the performance overhead of delivering the data first to the host processor’s memory before transferring it to the GPGPU, as would be the case with other switched fabrics.

Which leaves 10 GbE. Benefiting from the widest possible commercial and technology support, it is an obvious choice – where it can deliver the required performance for the customer application – for both interprocess communication in multi-CPU systems and for I/O from sensors to the data processor and on out to the user interface. For users requiring higher levels of performance in interprocessor communication, InfiniBand is superior, benefiting from the fact that it was not designed as a general-purpose communications technology, but as a flexible, fast, and scalable fabric specifically for this type of task. Hence, 10 GbE and InfiniBand are highly complementary (a fact seemingly reinforced by acquisitions by Intel) and provide the optimum fabric choice for VITA 65 platforms and for HPEC applications.

Michael Stern is a Senior Product Manager at GE Intelligent Platforms, where he is responsible for the company’s High-Performance Embedded Computing (HPEC) product line. He has spent 20 years within the defense and aerospace market. He can be reached at [email protected].

GE Intelligent Platforms defense.ge-ip.com