Explainable intelligence is on the way for neural networks

June 10, 2019

Neural networks are on the path toward explainable intelligence, which may help speed the adoption of artificial intelligence (AI) by the U.S. Department of Defense (DoD).

The DoD is prioritizing the fielding of AI systems to augment the capabilities of warfighters by offloading tedious cognitive or physical tasks and introducing more efficient ways of working.

The 2018 DoD AI Strategy states: “The United States, together with its allies and partners, must adopt AI to maintain its strategic position, prevail on future battlefields, and safeguard this order. We will also seek to develop and use AI technologies in ways that advance security, peace, and stability in the long run. We will lead in the responsible use and development of AI by articulating our vision and guiding principles for using AI in a lawful and ethical manner.”

Neural networks are one realm of AI actively being explored for future DoD use. The Defense Advanced Research Projects Agency (DARPA) is running a program called Explainable Artificial Intelligence (XAI), whose goal is to develop neural networks that can explain why they make their decisions and show users the data that plays the most important role in their decision-making process.

Neural nets

Bill Ferguson, lead scientist at Raytheon BBN and principal investigator on DARPA’s Explainable AI program, gives an inside look at the concept and use of neural nets.

Neural networks got their start back in the 1960s, with the first ones simply a bunch of electrical components connected via wires and knobs that could be adjusted to make them do the right thing. “We’re doing the same thing now – just hundreds of billions of times faster, bigger, and better,” Ferguson says. “But it’s the same basic idea.”

A neural network is essentially a computer program with hundreds of millions of virtual components connected by virtual wires. These virtual wires have different connection strengths.

“In this great big network, some wires are considered to be the input components,” he explains. “On the far end of the network, with a bunch of wires in between, are the output components. It looks vaguely like a human or animal brain, which is why it’s called a neural network. Within the brain, components, namely the neurons, are connected together by the axons and dendrites of the neural cells.” Those have various connection strengths, depending on what the brain has learned.
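As a rough illustration of the "components and wires" picture Ferguson describes, the sketch below passes a signal from the input side to the output side of a very small network. The layer sizes, the connection strengths, and the `tanh` squashing function are all assumptions chosen for the example and do not come from the article or from any DARPA system.

```python
import math

def forward(inputs, layers):
    """Pass signals from the input side to the output side.

    `layers` is a list of weight matrices; layers[k][j][i] is the
    connection strength of the "wire" from unit i to unit j in layer k.
    """
    activations = list(inputs)
    for weights in layers:
        # Each unit sums its weighted inputs, then squashes the result.
        activations = [
            math.tanh(sum(w * a for w, a in zip(row, activations)))
            for row in weights
        ]
    return activations

# Hypothetical network: 3 inputs, 2 hidden units, 2 outputs,
# with hand-picked (not learned) connection strengths.
layers = [
    [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]],  # input side -> hidden units
    [[1.0, -1.0], [-1.0, 1.0]],            # hidden units -> output side
]
outputs = forward([1.0, 0.0, 1.0], layers)
print(outputs)
```

Real networks differ mainly in scale: the same weighted-sum-and-squash step is repeated across millions of connections rather than ten.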

Neural nets are organized in stacked layers, loosely analogous to the structure of the human brain. “Our partners at MIT have done work exploring what’s going on within these big stack systems and it turns out that the neurons very close to the pixels on the input side are recognizing things like colors, textures, and edges,” Ferguson says. “The ones higher up in the network – closer to where it’s about to make its decision – are recognizing things like wheels, feet, eyes, etc. Just like a human brain, the layers are meaningful. The lower layers are doing very simple things to recognize objects, while the higher layers take that data and put it together into more complex things like legs and arms or whole objects.”

Training neural nets

To train a neural net, “you take the kinds of input it might see, like a picture, and attach the pixels of the picture to the neurons on the input side of the system. On the output side, you have all of the possible answers that it might produce,” Ferguson says.

Imagine you want the system to label an image for you. On the output side it has one neuron for each label it might assign to the image: a neuron for dog, one for wrench, one for bus, and so on. “You train the system by literally showing it an image hundreds of billions of times and looking to see how the neurons on the outside are lighting up,” he adds. “Show it an image – you know what it is – of a dog. Then you look to see how lit up the dog neuron is and you can strengthen all of the different connections in this big jumble of connections that will make it more likely for it to be a dog. But you just strengthen it a tiny bit – and you keep doing it a bunch of times with labeled data – so that eventually when you show it a dog, the dog neuron lights up brighter than any of the others. And if you show it a cat, the cat neuron lights up brighter than any of the others.”
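The "strengthen it a tiny bit" loop can be sketched in a few lines. The example below is a minimal illustration, not the code used by any system mentioned here: it assumes a single layer of connections, a softmax output, four made-up "pixel" values per image, and a hypothetical step size of 0.1. The labels and training examples are invented for the sketch.

```python
import math

LABELS = ["dog", "cat", "bus"]

def scores(weights, pixels):
    """How brightly each output neuron lights up (softmax probabilities)."""
    raw = [sum(w * p for w, p in zip(row, pixels)) for row in weights]
    peak = max(raw)  # subtract the peak for numerical stability
    exps = [math.exp(r - peak) for r in raw]
    total = sum(exps)
    return [e / total for e in exps]

def train(examples, n_pixels, epochs=200, step=0.1):
    """Nudge connection strengths slightly after every labeled example."""
    weights = [[0.0] * n_pixels for _ in LABELS]
    for _ in range(epochs):
        for pixels, label in examples:
            probs = scores(weights, pixels)
            for j, row in enumerate(weights):
                target = 1.0 if LABELS[j] == label else 0.0
                # Strengthen wires toward the correct neuron and
                # weaken wires toward the others, a little at a time.
                for i, p in enumerate(pixels):
                    row[i] += step * (target - probs[j]) * p
    return weights

def predict(weights, pixels):
    """Return the label whose output neuron lights up brightest."""
    probs = scores(weights, pixels)
    return LABELS[probs.index(max(probs))]

# Invented labeled data: four "pixel" values per image.
examples = [
    ([1.0, 0.0, 0.0, 0.2], "dog"),
    ([0.0, 1.0, 0.0, 0.2], "cat"),
    ([0.0, 0.0, 1.0, 0.2], "bus"),
    ([0.9, 0.1, 0.0, 0.3], "dog"),
    ([0.1, 0.9, 0.0, 0.3], "cat"),
    ([0.0, 0.1, 0.9, 0.3], "bus"),
]
weights = train(examples, n_pixels=4)
print(predict(weights, [0.8, 0.1, 0.1, 0.2]))
```

The learning step itself is only the few lines inside `train`, which is consistent with Ferguson's point that the learning and deciding code is a small part of a full system.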

This simple process – if it has huge amounts of data – can do extremely well at labeling images. “It can do as well as humans in terms of how often it gets them right or wrong,” Ferguson says.

Most of the code in a big AI system “has to do with handling the data or translating the pixels into the right format, or if it’s text, dealing with the different kinds of codes for the way the text is formed,” Ferguson says. “The little pieces that do the learning and then make decisions at the end are usually only about two or three pages each. The code that actually makes the magic – both the learning and deciding – is a very small bit of code.”

While neural networks started out with a ton of code in the 1960s, in modern machine learning (ML) systems it’s just a little bit of code combined with a lot of data. “The trick is that it’s got a lot of data to learn from,” he adds. “There’s a very simple algorithm that figures out which things to reinforce when it wants to get a certain answer. And the rest of it just happens.”

What can neural networks do?

Neural networks can take on problems with two key characteristics. First, the input the system looks at each time it does its job must be well-defined: a movie, a picture, or even a big set of pictures. Second, the type of answer it should give must also be well-defined: for example, it should label the input, approve or reject it, or translate it into another language. “So you need a well-defined problem and a ton of data, typically labeled data,” Ferguson says. “Literally tens of millions of examples of where the problem has been solved correctly that it can look at it and learn from.”

Extremely competent systems today use enormous piles of labeled data. Self-driving cars, for example, are learning via hundreds of millions of hours of humans driving cars. “They put a camera in front of the car so you can see outside the car and they record everything the human driver does,” Ferguson says. “They try to get the neural net to imitate that behavior. Once it does, they put it through simulated worlds where they know the right thing to do because they’re running the simulator. Finally, they try it out in the real world. But there’s a huge need for labeled data. That’s why a lot of expert human behavior isn’t possible to do right now – because we don’t have huge bunches of labeled data being done correctly.”

Creating massive amounts of labeled data can raise privacy issues, however, and in other cases the data isn’t even being collected yet. “There are lots of mail carriers delivering mail right now, but we’re not taking careful video of them opening and closing mailboxes and looking at the letters to figure out where the letters go,” Ferguson points out. “If we were going to build robot mail deliverers, we’re a long ways from it because we’re not even gathering the data right now that you’d need to train them. There are huge obstacles to gathering that data: political, logistical, and computational. It’s really difficult.”

Challenges of neural networks

Neural networks have come a long way, but they still face barriers to use. Until recently, the biggest hurdle was to get them to perform well enough to prove their usefulness.

“Until about 10 years ago, neural networks were sort of a curiosity,” Ferguson says. “It’s been hard to get enough data and computational power to these systems. They’re typically trained in the cloud now, where a few, sometimes hundreds, of computers will look at all of this training data as the system is becoming competent. Once the system is trained, it doesn’t require anywhere nearly as much computation to make it do its job – show it a new image and have the system label it. You just present the image to the input side of the neurons, the signal strength flows through the net, and it makes its decision on the output side. It does this fast, usually within a few thousandths of a second.”

So neural nets have become competent within the past 10 years. But can you find enough data for the particular problem you’d like to solve? That remains a major limitation: “There are a whole bunch of labeled images on the Internet, thanks to Google and all of the search engines that can find this stuff,” Ferguson notes. “So neural nets have become really good at captioning images, labeling images, translating language, but it needs piles and piles of examples of a job done right.”

Another problem, Ferguson observes, is that neural nets don’t tell you why they make the choices they do. “Hundreds of thousands of neurons in the system are simulated and passing little signals to each other, and you can say, ‘Wow, it learned,’ but we have no idea why or how it’s decided that this particular picture of a zebra is a zebra,” he says. “We’re trying to figure out why it happens and to explain it to people. It’s interesting to be building systems without entirely understanding how they work. We’ve got our little algorithm that trains them, but we really don’t know everything it’s doing inside to make the tasks possible.”

On the path to “explainable AI”

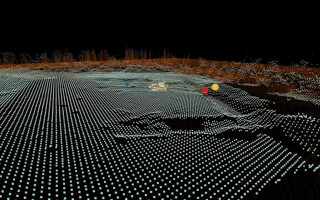

Neural networks have reached the point where, to move forward, “explainable AI” must play a critical role. This is where DARPA’s XAI program comes in (Figure 1). It’s attempting to create a suite of ML techniques that produce more explainable models while maintaining a high level of performance. “Explainable AI will increase trust in the system and humans’ ability to know when they should use the system or when it might be best not to rely on an automated system,” Ferguson says.

Figure 1 | DARPA’s XAI, or Explainable AI, program is aimed at creating a suite of machine learning (ML) techniques that produce better-performing, more-explainable models. Courtesy of DARPA.
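To make "showing users the data that plays the most important role" concrete, here is a minimal sketch of one generic approach, occlusion sensitivity: blank out each input in turn and see how much the output score drops. This is offered only as an illustration of the idea; the article does not say which techniques the XAI program uses, and the `dog_score` function and its weights are hypothetical stand-ins for a trained network.

```python
def dog_score(pixels):
    """Stand-in for a trained network's 'dog' output (hypothetical weights)."""
    weights = [0.1, 2.0, -0.5, 0.0]
    return sum(w * p for w, p in zip(weights, pixels))

def occlusion_importance(score_fn, pixels, blank=0.0):
    """Score drop when each input is blanked out, one at a time."""
    baseline = score_fn(pixels)
    drops = []
    for i in range(len(pixels)):
        occluded = list(pixels)
        occluded[i] = blank
        # A large drop means the decision leaned heavily on this input.
        drops.append(baseline - score_fn(occluded))
    return drops

pixels = [1.0, 1.0, 1.0, 1.0]
importance = occlusion_importance(dog_score, pixels)
most_important = max(range(len(pixels)), key=lambda i: importance[i])
print(importance, most_important)
```

Because the procedure only needs to call the scoring function, it treats the network as a black box, which is one reason perturbation-style explanations are a common starting point.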

Right now, the program is still in the basic research stage. “We’re literally working with pictures of cats, dogs, buses, and pictures of streets,” he adds. “We’re publishing all of our research. DARPA does this sort of thing when it’s trying to make progress and wants to see what’s possible. Much later on, we’ll potentially be looking at DoD applications, but we’re not there yet with this program.”

The end goal of the entire endeavor: Building trust via explainable AI.