PCI Express switches to P2P in OpenVPX

October 10, 2013

Of all the serial switched fabrics in OpenVPX systems, PCI Express (PCIe) has perhaps the least sex appeal. PCI has, after all, been at the heart of PC architectures from time immemorial – and its offspring, PCI Express, continues to play a key role in modern PCs. But for OpenVPX systems designers in the know, PCIe’s an invaluable asset – especially since an important limitation has been addressed: The Peer-To-Peer (P2P) performance challenge.


Meeting the P2P challenge

In the OpenVPX world, PCIe is a compelling fabric choice, especially for 3U boards using Intel processors. One reason is that PCIe is native to Intel chipsets – it’s their mother tongue. Thus, in a sense, it comes for free. Because it’s native to these chips, it can be implemented with less silicon than is typically required by other fabrics, minimizing footprint and power penalties.

Adding a network interface controller to implement 10 GbE, Serial RapidIO, or InfiniBand on a 3U SBC might force a trade-off with another desirable feature. The integrated chipset in a single-processor SBC, however, can buy some PCIe connectivity. Chipsets typically can provide up to five unique PCIe paths to the respective peripherals.

PCIe is a potentially attractive option for the heavy-lifting OpenVPX data plane, as well as for the control plane and the expansion plane. PCIe can connect a processor to a peripheral – often via the expansion plane – at high data rates. It can provide I/O from 1 to 16 lanes – ultra thin pipe to quad fat pipe – yielding raw bandwidths at the high end (8 GBps) that are three to four times higher than those of other fabrics such as DDR InfiniBand and Serial RapidIO.
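The lane arithmetic behind that 8 GBps figure can be sketched in a few lines. This is a rough illustration, not a vendor calculation: it assumes PCIe Gen2 signaling (5 GT/s per lane with 8b/10b encoding), which the article does not state but which matches 8 GBps on 16 lanes. The pipe names for 2, 4, and 8 lanes follow common OpenVPX usage and are likewise an assumption; only the 1-lane and 16-lane names come from the text.

```python
# Assumption: PCIe Gen2 signaling, 5 GT/s per lane with 8b/10b encoding.
TRANSFERS_PER_S = 5.0e9
ENCODING_EFFICIENCY = 8 / 10  # 8 payload bits carried per 10 line bits

# Pipe names by lane count; x1 and x16 are from the article,
# the intermediate names are assumed from common OpenVPX usage.
PIPE_NAMES = {
    1: "ultra thin pipe",
    2: "thin pipe",
    4: "fat pipe",
    8: "double fat pipe",
    16: "quad fat pipe",
}


def raw_bandwidth_gbytes(lanes: int) -> float:
    """Raw bandwidth in GB/s, one direction, for a link with `lanes` lanes."""
    bits_per_s = lanes * TRANSFERS_PER_S * ENCODING_EFFICIENCY
    return bits_per_s / 8 / 1e9


for lanes, name in PIPE_NAMES.items():
    print(f"x{lanes:<2} {name:<16} {raw_bandwidth_gbytes(lanes):.1f} GB/s")
```

Under these assumptions each lane contributes 0.5 GB/s per direction, so the figure scales linearly with link width. Protocol overhead would reduce the usable throughput below these raw numbers.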

The downside to PCIe in OpenVPX has been its performance in P2P architectures, where a fabric connects two or more processors. P2P connectivity is becoming more important in today’s High Performance Embedded Computing (HPEC) applications, such as sensor processing, where multiple CPUs, peripherals, and high-powered chips such as FPGAs might be linked together in one 3U chassis.

Enter NTB

As mentioned, in its natural state, PCIe expects one processor to be connected to one or more peripherals. Internal PCIe switching via processor chipsets cannot support multiprocessor connectivity. But this challenge has now been overcome, thanks to the invention of Non-Transparent Bridging (NTB), a technology that allows two or more processors to communicate with each other on PCIe. NTB permits some links to be hidden from CPUs in the discovery process. Thus, a second processor – and its peripherals – can be hidden from the first processor and its peripherals to avoid conflicts. A “window” in the switch, meanwhile, can be manipulated in software as a communication portal between processor domains.
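One way to picture the "window" is as a software-programmed address translation: an access that lands inside the window on one processor's side is redirected to a chosen region in the other processor's address space. The toy model below illustrates only that idea. The class, its fields, and the addresses are hypothetical and do not correspond to any real switch register layout or driver API.

```python
from dataclasses import dataclass


@dataclass
class NtbWindow:
    """Hypothetical model of one NTB translation window (not a real driver API)."""

    local_base: int   # start of the window as seen by the local processor
    size: int         # window size in bytes
    remote_base: int  # where the window lands in the peer's address space

    def translate(self, local_addr: int) -> int:
        """Map a local address inside the window into the peer's address space."""
        offset = local_addr - self.local_base
        if not 0 <= offset < self.size:
            raise ValueError(f"address {local_addr:#x} is outside the window")
        return self.remote_base + offset


# Illustrative values only: a 1 MiB window at 0x9000_0000 on the local side
# that software has pointed at 0x2000_0000 on the peer side.
window = NtbWindow(local_base=0x9000_0000, size=0x10_0000, remote_base=0x2000_0000)
print(hex(window.translate(0x9000_0040)))
```

Anything outside the window stays invisible to the other side, which is how each processor keeps its own peripherals free of conflicts while still sharing a defined region with its peer.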

NTB can be implemented via a PCIe switch added to a processor card or through a separate switch card. Newer external PCIe switch cards incorporate NTB and P2P driver packages, making it easier for programmers to use the fabric to support multiprocessor systems. Newer switch cards can also perform other functions, such as PMC/XMC hosting, helping to drive down system Size, Weight, and Power (SWaP) and reduce the thermal load on the SBC.

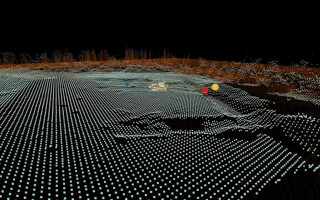

An example of a highly flexible switching device that supports NTB technology and P2P architectures is the GE Intelligent Platforms PEX431 (Figure 1), an OpenVPX, multifabric (PCIe and GbE) multiplane switch and XMC mezzanine card, with built-in DMA, multicast, and fail-over mechanisms, designed to interconnect up to six endpoints – processors or other devices – in 3U systems.

Figure 1: The GE Intelligent Platforms PEX431 multifabric switch and PMC/XMC carrier card allow VPX PCI Express peer-to-peer connectivity.


Virtues of external NTB/P2P switching

To be sure, adding an external switch card to a system means taking up another slot in the chassis. This trade-off won’t work for everyone. But for some applications, if the switch card is more than the sum of its parts, it could produce system-level SWaP advantages.

Designers of small OpenVPX systems with limited growth potential might choose to add PCIe switches to their SBCs to avoid consuming an extra slot for switching. But designers of larger OpenVPX systems with growth expectations might be wise to choose a PCIe switch card, especially if it also supports NTB/P2P, multiple fabrics and planes, and can offer thermal load sharing for power-hungry XMC devices.

defense.ge-ip.com