Inside the mind of machines: AI modeling scales security, analytics on the Industrial Internet

Story | June 16, 2016

The advent of the Industrial Internet has raised the bar for security analysts and data scientists, a workforce that is quickly being dwarfed by the number of connected machines. Now, machine learning and artificial intelligence professionals are teaming with traditional embedded vendors to help hold back the rising tide of cyber threats and Big Data.

On its website, cyber security firm Norse Corporation generates a detailed, real-time map of cyber attacks occurring around the world, including the attack origin, attack type, and attack target (Figure 1). Hundreds of attacks are registered in any given minute, a figure that, while disconcerting, pales in comparison with the number of systems being connected to the Industrial Internet.

![Norse Corporation produces a real-time map of worldwide cyber attacks, which calculates hundreds per minute.](https://data.militaryembedded.com/uploads/articles/wp/5511/57633098ef650-Figure+1-backup.png)

[Figure 1 | Norse Corporation produces a real-time map of worldwide cyber attacks, which calculates hundreds per minute.]

This brief exercise demonstrates a couple of things: 1) the quantity and speed of cyber threats that can be used to attack vulnerable, safety-critical industrial systems; and 2) the growing need for data scientists and security analysts [1], as well as tools and technologies to support them as the Internet of Things (IoT) expands. According to information from the U.S. Bureau of Labor Statistics, 82,900 information security analysts were employed in 2014, a figure projected to grow by 18 percent through 2024 [2]. However, as the number of connected devices grows into the billions, will roughly 100,000 security professionals be sufficient to offset the majority of cyber attacks targeted at connected industry?

Consider this question in the context of a single analyst reviewing security logs of 1,000 connected machines at random. Assume that 95 percent of the logs ingested by the operator’s security information and event management (SIEM) system conform to normal operation, while the remaining 5 percent of logs are suspicious (containing evidence of potential intrusions or violations). This equates to a total of 50 potential security threats in the entire collection, but also implies that the analyst will investigate 20 logs on average before encountering an actual threat, and will need to repeat this log-checking process 50 times before encountering all threats (again, on average). If one of the 50 potential threats includes sophisticated malware such as Stuxnet or Duqu, designed to commandeer the operation of cyber-physical industrial control systems or wipe hard drives, the analyst may not be able to intervene in time to protect millions of dollars’ worth of equipment or IP. Meanwhile, events continue to occur for all devices connected to the network.
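The arithmetic in this scenario can be checked with a short sketch. The function names below are illustrative and not part of any SIEM product. The 20-log figure corresponds to treating each log as independently suspicious with probability 0.05; reviewing a fixed pool of 1,000 logs without repeats gives a nearly identical expectation.

```python
from fractions import Fraction


def reviews_per_threat(threat_rate: Fraction) -> Fraction:
    """Expected logs reviewed per threat if each log is independently
    suspicious with probability `threat_rate` (geometric distribution)."""
    return 1 / threat_rate


def reviews_to_first_threat(total_logs: int, threat_logs: int) -> Fraction:
    """Expected logs reviewed before hitting the first threat when a fixed
    pool is reviewed in random order without repeats: (N + 1) / (K + 1)."""
    return Fraction(total_logs + 1, threat_logs + 1)


# Figures taken from the scenario above.
total_logs = 1_000
threat_rate = Fraction(5, 100)
threat_logs = int(total_logs * threat_rate)

print("suspicious logs:", threat_logs)
print("reviews per threat (independent logs):", reviews_per_threat(threat_rate))
print("reviews to first threat (fixed pool):",
      float(reviews_to_first_threat(total_logs, threat_logs)))
```

Either way, the analyst spends roughly 19 of every 20 reviews on logs that turn out to be normal.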

[Figure 2 | Assuming that five percent of security logs contain actual threats, security analysts are forced to manually review as many logs as possible to increase the probability of detecting said threats.]

The lesson here on the state of today’s cyber defense workforce should be clear to operators of safety-critical infrastructure, the security industry, and the IoT at large. But the prior examples also prompt a secondary realization: the signature-based methodologies used by firewalls to authenticate packets crossing the network, as well as local antivirus software used to prevent malware from launching on a device, are no longer sufficient security technologies by themselves in today’s cyber-physical world. Both Stuxnet and Duqu leveraged stolen or abused keys to gain access to target systems, and with digital signature libraries constantly in flux, it is difficult, if not impossible, for signature-based security systems and the analysts who rely on them to consistently mitigate threats.

To augment security teams, players in the Industrial Internet need to permit security professionals to quickly evaluate potential threats by embedding an “engineer, or at least that level of intelligence, in every machine,” says Amir Husain, Founder and CEO of SparkCognition.

“Embed a brain [into the system] that is the equivalent of a high-end mechanical engineer that can monitor the system,” Husain says. “If you visualize it that way, it’s no longer about what virus, whether it’s called Stuxnet, whether it’s called Flame, or whether it’s called a third name, the point is that you are monitoring the symptoms and the entire system out of band. Regardless of how the threat got in, you’re out of band of the IT network and you’re looking at the typical behavior of the system and saying, ‘Hey, the level of vibration or the speed at which this motor is running or the harmonics just don’t look good, and I’m predicting that this device will fail imminently. The gradient of that remaining, useful life curve is so sharp that this cannot be just normal wear and tear.’

“We’re talking about embedding a brain, a specialist’s brain, with every system and having the ability to evolve that brain to the custom needs of every individual device. When we talk about dynamically evolving models of behavior and having the capabilities for these models to learn and adapt to the specific asset over time, that’s really what we’re talking about.”
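As a rough illustration of the behavior-based monitoring Husain describes, the following sketch flags vibration readings that stray from a learned baseline and measures how steeply a remaining-useful-life estimate is falling. It is a minimal example under stated assumptions (a fixed baseline window, a three-sigma threshold, and made-up readings), not SparkCognition’s algorithm.

```python
from statistics import mean, stdev


def zscore_alerts(readings, baseline_size=20, threshold=3.0):
    """Return indices of readings that sit more than `threshold` standard
    deviations from the mean of the first `baseline_size` readings."""
    baseline = readings[:baseline_size]
    mu, sigma = mean(baseline), stdev(baseline)
    return [
        i
        for i, value in enumerate(readings[baseline_size:], start=baseline_size)
        if abs(value - mu) > threshold * sigma
    ]


def slope(values):
    """Least-squares slope of equally spaced values (units per sample)."""
    n = len(values)
    x_bar = (n - 1) / 2
    y_bar = mean(values)
    numerator = sum((i - x_bar) * (y - y_bar) for i, y in enumerate(values))
    denominator = sum((i - x_bar) ** 2 for i in range(n))
    return numerator / denominator


# Hypothetical vibration amplitudes: 20 normal samples, then a developing fault.
normal = [1.0 + 0.01 * ((i % 5) - 2) for i in range(20)]
vibration = normal + [1.01, 0.99, 1.25, 1.40]

# Hypothetical remaining-useful-life estimates, in days, from some upstream model.
remaining_life = [100, 90, 80, 70]

print("anomalous sample indices:", zscore_alerts(vibration))
print("remaining-life slope (days per sample):", slope(remaining_life))
```

The point of the design is that neither check depends on knowing which piece of malware, if any, caused the change; both look only at the physical symptoms.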

Genetic competition feeds intelligent machines

Founded in 2013, SparkCognition is an artificial intelligence (AI) and machine learning firm that develops algorithms and decision-making models that automate the creation of cognitive, intelligent systems. Based on technology whereby structured, semi-structured, and unstructured data is acquired and mined (see Sidebar 1), SparkCognition’s SparkSecure platform layers cognitive intelligence into traditional security solutions to permit the detection of zero-day attacks (signature-less threats), monitoring of threat behavior to predict potential future attacks, and efficiency scaling of security teams by analyzing and sorting log data before prioritizing those records for analyst review. These capabilities can be brought down to the individual system level, which is made possible by elastic machine learning models generated through a process known as ensembling.

Sidebar 1 | Structured data ingress for cognitive and intelligent systems

An often overlooked challenge in the worlds of Big Data and the Industrial Internet is the collection of data from sensor devices themselves in a manner that is ingestible by cloud/IT backends. For example, inputs could include structured (binary), semi-structured (part binary, part text), or unstructured (text-heavy) data, making it difficult for other machines, and even cognitively intelligent platforms, to interpret.

To ensure unified data capture across a diverse range of connected devices for its SparkSecure and SparkPredict (for data analytics and predictive maintenance) offerings, SparkCognition has partnered with National Instruments, creator of the technical data management streaming (TDMS) file format. Unlike other file formats such as ASCII and XML that emphasize application planning, software architecture, or system design and leave storage decisions to the organizational processes of operators, TDMS was initially architected for storing test and measurement data in a highly structured, scalable, streamable, and exchangeable way to prevent information silos from occurring on isolated machines. According to Jamie Smith, Director of Embedded Systems at National Instruments, this “open, documented standard to efficiently organize and analyze data” is critical to data acquisition systems, as well as the prognostics and machine learning systems they feed.

“TDMS provides both binary and metadata to document the data set for additional analysis and provide efficient storage and transport across the network,” Smith says. “The TDMS file format is used to organize and analyze engineering design data, test data, and analog operational data. Companies are using this format in conjunction with our technical data analysis tool DIAdem and InsightCM enterprise to improve overall quality, reduce maintenance cost, and increase uptime.”

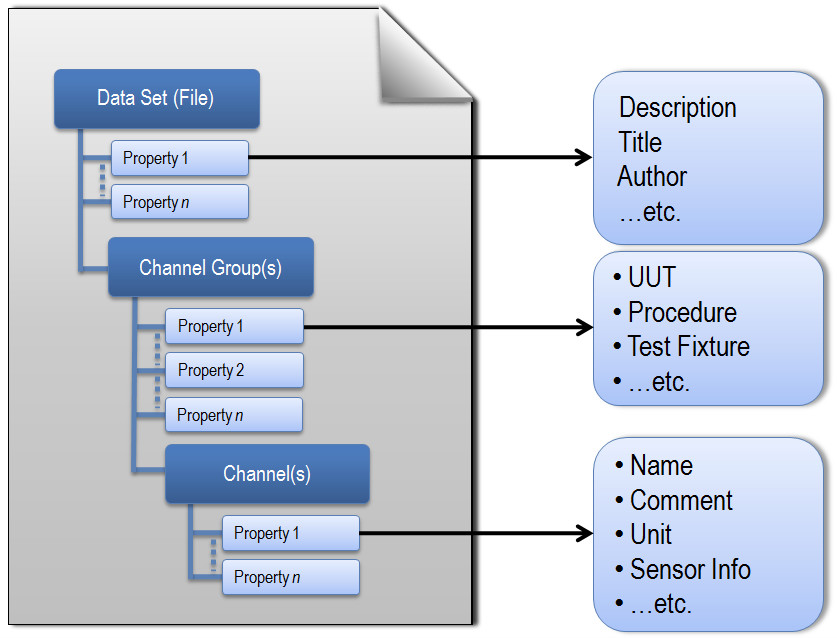

TDMS files are hierarchically structured into three distinct levels: File, Group, and Channel. As seen in the accompanying figure, this model allows for descriptive information to be included in the file along with other data, so that data sets can be easily documented and scaled as application or system requirements evolve.
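The File, Group, and Channel hierarchy can be pictured with a few Python data classes. This is only a conceptual sketch: the class, field, and property names are hypothetical, and the code does not read or write the actual TDMS binary format or use any NI API.

```python
from dataclasses import dataclass, field


@dataclass
class Channel:
    """Lowest level: one measured signal plus descriptive properties."""
    name: str
    samples: list = field(default_factory=list)
    properties: dict = field(default_factory=dict)


@dataclass
class Group:
    """Middle level: a named collection of related channels."""
    name: str
    channels: list = field(default_factory=list)
    properties: dict = field(default_factory=dict)


@dataclass
class File:
    """Top level: groups plus file-wide descriptive properties."""
    name: str
    groups: list = field(default_factory=list)
    properties: dict = field(default_factory=dict)

    def channel_paths(self):
        """List every channel as file/group/channel with its sample count."""
        return [
            f"{self.name}/{group.name}/{channel.name} ({len(channel.samples)} samples)"
            for group in self.groups
            for channel in group.channels
        ]


# Illustrative data only: a motor test with two channels in one group.
motor_test = File(
    name="motor_test",
    properties={"operator": "example"},
    groups=[
        Group(
            name="vibration",
            properties={"sensor": "accelerometer"},
            channels=[
                Channel("accel_x", [0.01, 0.02, 0.01], {"unit": "g"}),
                Channel("accel_y", [0.00, 0.03], {"unit": "g"}),
            ],
        )
    ],
)

for path in motor_test.channel_paths():
    print(path)
```

Because every level carries its own properties, a consumer can discover units or sensor types from the data set itself instead of from out-of-band documentation.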

“This data format is used with several machine learning and prognostics offerings, including NI’s machine learning tool kit, Watchdog Agent (whose prognostics were developed in collaboration with the Center for Intelligent Maintenance Systems), SparkCognition’s prognostics tools, and has been integrated into IBM’s Bluemix as part of the Industrial Internet Consortium Predictive Maintenance Testbed hosted by National Instruments,” he continues. “Because all of these tools can ingest TDMS, you can separate data acquisition from your analytics choices to allow flexibility when choosing an analytics tools offering.”

For more information, access a TDMS white paper at www.ni.com/white-paper/3727/en.

Given the various schools of thought in the AI community around model creation – including neural networks, Bayesian statistics, hidden Markov models (HMMs), natural language processing, and support vector machines (SVMs) – ensembling provides a multi-disciplinary approach to cognitive modeling that combines various machine learning techniques in something of an automated genetic competition to produce the best result for a given machine serving a specific purpose. This strategy enables the best possible solution for cognitive systems (which have traditionally been limited to learning in a brittle if/then/else-type fashion), and also allows model development to occur at speeds exponentially faster than would be possible if the models were built by human engineers. To describe the ensembling process, Husain imagines four engineers in a room working to solve a complex problem.

“Let’s say you come from an electrical engineering background and I come from a software engineering background and Nick comes from a mechanical engineering background and John comes from a structural engineering background,” he says. “We have a problem that we collectively put our minds to and we all bring our perspectives to it.

“Often what happens is that it’s not that one person is absolutely right and three others are absolutely wrong, but that there’s some mixture of value that can be put together to create something that’s composite, that’s better than any one idea alone,” he continues. “SparkCognition uses automated algorithms to create models using different techniques, as, for example, you might want to model something as an HMM in a deep neural network and through a Bayesian filter, and have all of this on an SVM. Ensembling, which is the combination of various different solutions, is a form of competition, and usually we employ genetic techniques to induce competition between auto-assembled and auto-created models. It’s about taking every tool at your disposal and having an automated way to apply all those tools to learn from the application as to what was effective and what was not, and then creating a composite solution that solves the problem in the best way possible.

“That’s really what the universe has been doing for the last 13.8 billion years, and finally it dawned on the human mind. That’s really the approach, but usually we take a little less than 13.8 billion years to do it,” Husain adds.
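To make the idea of competing, combined models concrete, here is a toy sketch in which three simple detectors vote on whether an event is a threat and a basic genetic algorithm evolves the weight given to each vote. Everything in it is an assumption made for illustration: the events, the three detector rules, and the particular selection, crossover, and mutation scheme. It is not SparkCognition’s implementation, which the article does not describe at this level.

```python
import random

# Hypothetical labeled events: (failed_logins, megabytes_out, off_hours), is_threat
EVENTS = [
    ((0, 10, 0), 0), ((1, 20, 0), 0), ((5, 15, 0), 0), ((0, 900, 0), 0),
    ((2, 30, 1), 0), ((6, 800, 0), 1), ((7, 40, 1), 1), ((1, 950, 1), 1),
    ((8, 700, 1), 1), ((0, 25, 1), 0),
]

# Three deliberately simple base models, each looking at one signal.
BASE_MODELS = [
    lambda e: e[0] > 3,    # many failed logins
    lambda e: e[1] > 500,  # large outbound transfer
    lambda e: e[2] == 1,   # activity outside working hours
]


def predict(weights, event):
    """Weighted vote: flag a threat if the firing models hold most of the weight."""
    votes = sum(w for w, model in zip(weights, BASE_MODELS) if model(event))
    return int(votes > 0.5 * sum(weights))


def accuracy(weights):
    """Fraction of labeled events the weighted ensemble classifies correctly."""
    return sum(predict(weights, e) == label for e, label in EVENTS) / len(EVENTS)


def evolve(generations=30, population_size=12, seed=0):
    """Evolve vote weights by selection, crossover, and mutation."""
    rng = random.Random(seed)
    n = len(BASE_MODELS)
    # Start with each base model on its own, then add random mixtures.
    population = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    population += [[rng.random() for _ in range(n)]
                   for _ in range(population_size - n)]
    for _ in range(generations):
        population.sort(key=accuracy, reverse=True)
        survivors = population[: population_size // 2]  # fittest half survives
        children = []
        while len(survivors) + len(children) < population_size:
            a, b = rng.sample(survivors, 2)
            child = [rng.choice(pair) for pair in zip(a, b)]          # crossover
            child = [max(0.0, w + rng.gauss(0, 0.1)) for w in child]  # mutation
            children.append(child)
        population = survivors + children
    return max(population, key=accuracy)


best = evolve()
for i in range(len(BASE_MODELS)):
    alone = [1.0 if i == j else 0.0 for j in range(len(BASE_MODELS))]
    print(f"model {i} alone: accuracy {accuracy(alone):.1f}")
print(f"evolved ensemble: accuracy {accuracy(best):.1f}")
```

Because the fittest half of each generation always survives and the starting population includes each detector on its own, the evolved ensemble can never score worse on this data than the best single detector, which mirrors Husain’s point that the composite should beat any one idea alone.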

To accelerate the development of precision models for cognitive machines, SparkCognition maintains an active network of systems in order to collect data of relevance to the industries it serves, which can then be used to augment device data gathered from a system itself. For example, security information gathered since the company’s inception can be used to supplement data from a newly deployed machine, thus accelerating return on investment (ROI) as the algorithm and model development process does not have to start from scratch. For industrial customers, trained models can be operational in a timeframe spanning hours to a few days.

Artificial Intelligence: May the disruption commence

However, getting trained models operational is only part of the job, as the model generated for a particular system is only as good as the data available to it at the time of its creation. Actual intelligence requires the ability to account for new logs and sensor data generated by the system (as well as human input received) well after initial model deployment and into the future. For this purpose, SparkCognition’s “Cognitive Fingerprinting” algorithms are used to analyze diverse data sets and recognize patterns forming in real time, for example by predicting a change in system state. A partnership with IBM also facilitates integration with the Watson platform so that Watson can provide remediation responses in the event of a system anomaly.

Considering these capabilities, in addition to working relationships with embedded data acquisition vendors like National Instruments that aid in unified data ingress, the end-to-end nature of emerging AI ecosystems such as SparkCognition’s suggests that systems are in a position to operate completely autonomously, with minimal need for human intervention. But how realistic is that today? Husain explains.

“SparkSecure, out of the box, is able to act entirely autonomously,” he says. “SparkSecure can be deployed in an environment where it can sense an attack, it can determine the likelihood of this being a real attack versus a false positive, and then can take action, which includes things like making a change to a firewall, disabling a user account, killing a service, and so on. But the reality is that, for non-technical reasons, most of our customers elect to have these actions vetted by an incident response professional before they are implemented.

“This is new technology. Think about the self-driving car. Google has already shown that the self-driving car can do better than most human drivers, but there is a reticence and a nervousness around simply going ahead and implementing that as a technology that’s common,” Husain continues. “[AI and machine learning] are similar. These systems work really, really, really well in their current form, and SparkSecure already augments human security professionals and allows one person to look at more data than they could ever have looked at on their own, and it proposes the actions as well. But when it comes to actually making the change, our customers are at the point where they want that human validation, which I completely understand. But, ultimately, at some point in the future, can such a system be autonomous? Of course.”
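The workflow Husain outlines, in which a system estimates the likelihood of an attack and then either acts or defers to an incident response professional, can be sketched as a simple gate. The thresholds, names, and return values below are hypothetical choices for illustration and do not reflect SparkSecure’s actual interface.

```python
from dataclasses import dataclass


@dataclass
class ProposedAction:
    """A remediation step suggested by the detection system (illustrative)."""
    description: str
    attack_likelihood: float  # model's estimate, from 0.0 to 1.0


def decide(action, autonomous=False, act_threshold=0.9, review_threshold=0.5):
    """Route a proposed action: dismiss it, execute it, or ask a human.

    With autonomous=False (the choice most customers make, per the article),
    nothing is executed without analyst sign-off.
    """
    if action.attack_likelihood < review_threshold:
        return "dismiss"
    if autonomous and action.attack_likelihood >= act_threshold:
        return "execute"
    return "queue_for_analyst"


block_rule = ProposedAction("add firewall rule blocking source address", 0.97)
odd_login = ProposedAction("disable user account", 0.62)
noise = ProposedAction("kill service", 0.10)

for proposal in (block_rule, odd_login, noise):
    print(proposal.description, "->", decide(proposal))
```

Switching `autonomous` to `True` changes only the highest-confidence path, which is one way to introduce autonomy gradually while analysts continue to vet the ambiguous cases.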

References

1. Vijayan, Jaikumar. “Demand for IT Security Experts Outstrips Supply.” Computerworld. 2013. http://www.computerworld.com/article/2495985/it-careers/demand-for-it-security-experts-outstrips-supply.html.

2. “Summary.” U.S. Bureau of Labor Statistics. http://www.bls.gov/ooh/computer-and-information-technology/information-security-analysts.htm.

![Assuming that five percent of security logs contain actual threats, security analysts are forced to manually review as many logs as possible to increase the probability of detecting said threats.](https://data.militaryembedded.com/uploads/articles/wp/5511/576331ca9db21-Figure+2.png)